This post will walk you through a simplified PKS (Pivotal Container Service) deployment in my lab. I call it simplified because all the components will be deployed on a single flat network. PKS has several network dependencies. These include the need for the BOSH agents on the Kubernetes (K8s) VMs to reach both the BOSH Director and the vCenter server. Let’s not get too deep into the components just yet; these will be explained over the course of the post. So rather than trying to set up routing between multiple different networks, I have deployed everything on a single flat network. Again, like some of my previous posts, this is more of a learning exercise than a best practice. What it will show you are the various moving parts that need to be considered when deploying your own PKS. If you are looking for a more complex network deployment, have a read of the excellent series of posts that my good pal William Lam put together. He highlights all the steps needed to get PKS deployed with NSX-T. I will be referencing William’s blogs from time to time as they contain some very useful snippets of information. Note that this is going to be quite a long post, as there are a lot of pieces to deploy to get to the point where a K8s cluster can be rolled out.

If you want to learn more about PKS, have a read of this blog post where I discuss the PKS announcement from VMworld 2017.

1. Networking overview

Let’s begin by having a quick look at the various components and how they are connected. I put together this diagram to assist with understanding the network dependencies.

To get started, the Pivotal Ops Manager is deployed. It needs to be able to communicate with your vSphere environment, and it is responsible for deploying the BOSH Director component and then the Pivotal Container Service (PKS). Once these are deployed, a PKS client VM is set up which contains the UAA (user account and authorization) and PKS command line tools. These are used to get the correct privileges to roll out the Kubernetes cluster(s). As I mentioned in the opening paragraph, the BOSH agents on the K8s nodes need to reach back to the BOSH Director to pull down the appropriate packages, as well as reach back to the vSphere environment to request the creation of persistent disks for the master and workers. While this makes the integration with NSX-T really useful, I just wanted to look at the steps involved without having NSX-T in the mix. And because I am restricted in my own lab environment, I went with a single flat network as follows:

Now that we know what the networking looks like, let’s check which components we need to deploy.

2. PKS Components

We already mentioned that we will need the Pivotal Ops Manager. This is an OVF which, once deployed, can be used to deploy the BOSH Director and PKS, the Pivotal Container Service.

We will then need to deploy the necessary components on a VM which we can refer to as the PKS Client. The tools we need to install in this VM are the UAA CLI (for user authentication), the PKS CLI (for creating K8s clusters) and finally the kubectl CLI (for managing our K8s cluster).

This is all we need, and with this infrastructure in place, we will be able to deploy K8s clusters via PKS.

3. Deploying Pivotal Ops Manager

This is a straightforward OVF deployment. You can download the Pivotal Cloud Foundry Ops Manager for vSphere by clicking here. It is preferable to use a static IP address and/or FQDN for the Ops Manager. Once deployed, open a browser to the Ops Manager, and you will be asked to select an authentication system as follows:

I used “Internal Authentication”. You will then need to populate the password fields and agree to the terms and conditions. Once the details are submitted, you will be presented with the login page where you can now log in to Ops Manager. The landing page will look something like this, where we see the BOSH Director tile for vSphere.

BOSH is basically a mechanism for deploying Pivotal software on various platforms. In this example, we are deploying on VMware vSphere, so now we need to populate a manifest file with all of our vSphere details. To proceed, we simply click on the tile below. Orange means that it is not yet populated; green means that it has been populated.

4. Configuring BOSH Director for vSphere

The first set of details that we need to populate are related to vCenter. As well as credentials, you also need to provide the name of your data center, a datastore, and whether you are using standard vSphere networking (which is what I am using) or NSX. Here is an example taken from my environment.

The next screen is the Director configuration. There are only a handful of items to add here. The first is an NTP server. The others are check-boxes, shown below. The interesting one is “Enable Post Deploy Scripts”. If this is enabled, BOSH will automatically deploy K8s applications, including the K8s dashboard, on your Kubernetes cluster immediately after the cluster is deployed. If this box is not checked, no applications are deployed and the cluster will be idle after it is deployed.

This brings us to Availability Zones (AZs). This is where you can define multiple pools of vSphere resources that are associated with a network. When a K8s cluster is deployed, you can select a particular network for the K8s cluster, and in turn the resources associated with the AZ. Since I am going with one large flat network, I will also create a single AZ which is the whole of my cluster.

Now we come to the creation of the networks and the assignment of my AZ to them. I am just going to create two networks, one for my BOSH Director and PKS and another for K8s. But these will be on the same segment, since everything is going to sit on one flat network, which is also the network my vCenter server resides on. As William also points out in his blog, the Reserved IP Ranges need some explaining. This entry defines which IP addresses are already in use on the network. So basically, you are blocking out IP ranges so that BOSH and K8s will not use them, and anything that is not defined can be consumed by BOSH and K8s. In effect, for BOSH, we will require 2 free IP addresses: the first for the BOSH Director and the second for PKS, which we will deploy shortly. So we need to block all IP addresses except for the two we want to use. For K8s, we will require 4 IP addresses for each cluster we deploy: one for the master and 3 for the workers. So block all IP addresses except for the 4 you want to use. This is what my setup looks like, with my 2 networks:
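The Reserved IP Ranges arithmetic is easy to get wrong by one, so here is a small, hypothetical POSIX shell helper that prints the ranges to block when you want a single contiguous window of free addresses. It is a sketch under two assumptions: the network is a /24 whose usable hosts are .1 to .254, and the field accepts a comma-separated list of first-last ranges. Adjust both for your own subnet.

```shell
#!/bin/sh
# Hypothetical helper: print the "Reserved IP Ranges" value that leaves only
# COUNT contiguous addresses free, starting at host FIRST_FREE.
# Assumes a /24 whose usable hosts are .1 to .254.
reserved_ranges() {
  prefix=$1       # first three octets, e.g. 10.27.51
  first_free=$2   # last octet of the first address BOSH/K8s may use
  count=$3        # number of addresses to leave free
  last_free=$((first_free + count - 1))
  if [ "$first_free" -lt 1 ] || [ "$count" -lt 1 ] || [ "$last_free" -gt 254 ]; then
    echo "reserved_ranges: free block must fit inside .1-.254" >&2
    return 1
  fi
  ranges=""
  # Block everything below the free window ...
  if [ "$first_free" -gt 1 ]; then
    ranges="$prefix.1-$prefix.$((first_free - 1))"
  fi
  # ... and everything above it.
  if [ "$last_free" -lt 254 ]; then
    if [ -n "$ranges" ]; then ranges="$ranges,"; fi
    ranges="$ranges$prefix.$((last_free + 1))-$prefix.254"
  fi
  printf '%s\n' "$ranges"
}
```

With the addresses that show up in the bosh transcripts later in this post (BOSH Director and PKS on .181 and .182, the K8s master and workers on .185 to .188), `reserved_ranges 10.27.51 181 2` prints `10.27.51.1-10.27.51.180,10.27.51.183-10.27.51.254` and `reserved_ranges 10.27.51 185 4` prints `10.27.51.1-10.27.51.184,10.27.51.189-10.27.51.254`.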

Finally, we associate an Availability Zone and a Network with the BOSH Director. I chose the network with 2 free IP addresses created previously, and my AZ is basically the whole of my vSphere cluster, also created previously.

I can now return to my installation dashboard (top left-hand corner in the above screenshot) and see that my BOSH Director tile has now turned green, meaning it has been configured. On the right-hand side, there are “Pending Changes”, which is to install the BOSH Director. Clicking on Apply Changes starts the rollout of my BOSH Director VM in vSphere.

You can track the progress of the deployment by clicking on the Verbose Output link in the top right-hand corner:

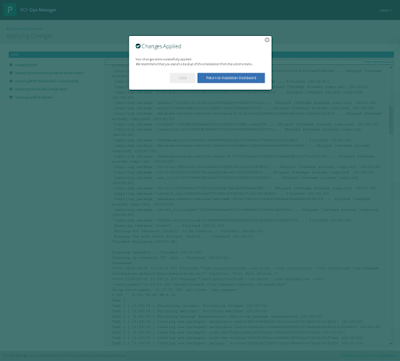

And if everything is successful, you will see a message confirming that the changes were successfully deployed.

You should now be able to see your BOSH Director VM in vSphere. The custom attributes of the VM should reveal that it is a BOSH Director. That completes the deployment of the BOSH Director. We can now turn our attention to PKS.

5. Adding the PKS tile and necessary stemcell

Now we install the Pivotal Container Service, which will appear as a new tile once imported. We then add a “stemcell” to this tile. A “stemcell” is a customized operating system image containing the filesystem for BOSH-managed virtual machines. For all intents and purposes, vSphere admins can think of this as a template. PKS can be downloaded from this location. On the same download page, you will notice that there is a list of available stemcells. The stemcell version needs to match the version that PKS requires, which will become obvious once the PKS tile is added.

To begin the deployment, click on “Import a Product” on the left-hand side of the Ops Manager UI. Browse to the PKS download, and if the import is successful, you will see the PKS product, along with its version, on the left-hand side as well.

Click on the + sign to add the PKS tile, and it will appear in an orange, unconfigured state, as shown below.

You will also notice that this tile requires a stemcell, as highlighted in red in the PKS tile. Click on this to import the stemcell.

Here you can also see the required version of stemcell (3468.21), so this is the one that you need to download from Pivotal. This will be different depending on the version of PKS that you choose. From the PKS download page, if we click on the 3468.21 release, we get taken to a download page where we can pick up the Ubuntu Trusty Stemcell for vSphere 3468.21. Once the stemcell is imported and applied, we can return to the Installation Dashboard and start to populate the configuration information needed for PKS by clicking on the tile.

6. Configure PKS

The first configuration item is Availability Zones and Networks. As I have only a single AZ and a single flat network, this is easy. For the network selection, the first network placement is for the PKS VM and the second (the Service Network) is for the K8s VMs. I will place PKS on the same network as my BOSH Director, and the Service Network will be assigned the network with the 4 free IP addresses (for the master and 3 workers). In my flat network setup, these are all on the same segment and VLAN, but in production environments they will most likely be on separate segments/VLANs, as shown in my first network diagram. If that is the case, you will have to make sure that the Service Network has a route back to the BOSH Director and PKS VMs, as well as to your vCenter server and ESXi hosts. In a future blog, I’ll talk about network dependencies and the sort of deployment issues you will see if these are not correct.

The next step is to configure access to the PKS API, which will be used later when we set up a PKS client VM with various CLI components. Access is secured by a certificate that is generated based on a domain, which in my case is rainpole.com. Populate the domain, then click Generate:

And once generated, click on Save.

The next step is to populate the Plans. These decide the resources assigned to the VMs which are deployed when your Kubernetes clusters are created. You will see later how to select a particular plan when we create a K8s cluster. These can be left at the default settings; the only required step in each plan is to select the Availability Zone. Once that is done, save the plans. Plan 1 is small, Plan 2 is medium. Plan 3 (large) can be left inactive.

This brings us to the K8s Cloud Provider. Regular readers of this blog might remember posts regarding Project Hatchway, a VMware initiative to provide persistent storage for containers. PKS leverages this technology to provide “volumes” to cloud native applications running in K8s. This has to be able to communicate with vSphere, so this is where these details are added. You will also need to provide a datastore name and a VM folder. I matched these exactly with the settings in the vCenter configuration for the BOSH Director. I’m not sure if this is necessary (probably not), but I didn’t experience any issues reusing them here for PKS.

Networking can be left at the default of Flannel rather than selecting NSX.

The final configuration step in the PKS tile is the UAA setting. UAA is the user account and authorization component; it is how we will manage the PKS environment, and it defines who can manage and deploy K8s clusters. This setting takes a DNS entry, and once PKS is deployed, that DNS entry will need to point to the PKS VM. I used uaa.rainpole.com.

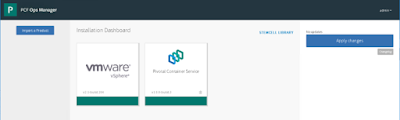

If we now return to the installation dashboard, we should once again see a set of pending changes, including changes to the BOSH Director and the installation of Pivotal Container Service (PKS). Click on Apply Changes as before. The changes can be tracked via the Verbose Output link, as highlighted previously.

If the changes are successful, the deployment dashboard should now look something like this:

So far so good. The next step is to set up a PKS client with the appropriate CLI tools so that we can go ahead and roll out K8s clusters.

7. Configuring a PKS Client

I’m not going to spend too much time on the details here. William has already done a great job of describing how to deploy the various components (uaac, pks and kubectl) on an Ubuntu VM in his blog post here. If you’d rather not use Ubuntu, the CLI components are in the same download location as PKS, which we saw earlier in this post. Once the components are installed, now is a good time to make your first DNS update. You will need to add a DNS entry for uaa.rainpole.com that resolves to the same IP address as the PKS VM (or add it to the /etc/hosts file on the PKS client).
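If you go the /etc/hosts route rather than DNS, you will end up adding more than one entry as you work through this post (uaa.rainpole.com now, the cluster's external hostname later). A minimal sketch of an idempotent helper follows. The function name is hypothetical; it only checks for an exact IP-and-name match, and it does not remove an older line that maps the same name to a different IP.

```shell
#!/bin/sh
# Hypothetical helper: append "IP NAME" to a hosts-style file unless that
# exact mapping is already present. Defaults to /etc/hosts (needs root).
# It does not remove or update an existing line mapping NAME to another IP.
add_hosts_entry() {
  ip=$1
  name=$2
  file=${3:-/etc/hosts}
  if [ -f "$file" ] && awk -v ip="$ip" -v name="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
      END { exit (found ? 0 : 1) }' "$file"; then
    return 0   # mapping already there, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

In this lab the PKS VM ended up on 10.27.51.182 (see the bosh vms output later), so, as root on the PKS client, that would be `add_hosts_entry 10.27.51.182 uaa.rainpole.com`.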

8. Deploy your first K8s cluster

The first step is to retrieve the secret token for your UAA admin. Select the Pivotal Container Service tile in Pivotal Ops Manager, select the Credentials tab, and then click on the Link to Credential. Here you will find the secret needed to create an admin user that can then be used to create K8s clusters via PKS. In the final command, we grant the user the “pks.clusters.admin” role, which gives full admin rights to all PKS clusters.

· uaac target https://uaa.rainpole.com:8443 --skip-ssl-validation
· uaac token client get admin -s
· uaac user add admin --emails admin@rainpole.com -p
· uaac member add pks.clusters.admin admin
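The four uaac commands can also be chained into a small script that stops at the first failure. This is a sketch only: the variable names (UAA_URL, UAA_ADMIN_SECRET, PKS_ADMIN_USER, PKS_ADMIN_EMAIL, PKS_ADMIN_PASSWORD) and the DRY_RUN switch are illustrative assumptions, not anything defined by PKS or uaac, and the subcommands and flags are simply the ones used above.

```shell
#!/bin/sh
# Sketch only: variable names and DRY_RUN are illustrative assumptions.
# With DRY_RUN=1, commands are printed instead of executed.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Create a PKS admin user in UAA, mirroring the four uaac commands above.
create_pks_admin() {
  if [ -z "${UAA_URL:-}" ] || [ -z "${UAA_ADMIN_SECRET:-}" ] || [ -z "${PKS_ADMIN_PASSWORD:-}" ]; then
    echo "create_pks_admin: set UAA_URL, UAA_ADMIN_SECRET and PKS_ADMIN_PASSWORD" >&2
    return 1
  fi
  user=${PKS_ADMIN_USER:-admin}
  email=${PKS_ADMIN_EMAIL:-admin@rainpole.com}
  run uaac target "$UAA_URL" --skip-ssl-validation || return 1
  # The client secret comes from the PKS tile's Credentials tab.
  run uaac token client get admin -s "$UAA_ADMIN_SECRET" || return 1
  run uaac user add "$user" --emails "$email" -p "$PKS_ADMIN_PASSWORD" || return 1
  # Grant full admin rights over all PKS clusters.
  run uaac member add pks.clusters.admin "$user"
}
```

Running it with `DRY_RUN=1` prints the four commands instead of executing them, which is a cheap way to review them before pointing them at a real UAA endpoint (note that the dry run echoes the secret and password to the terminal).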

Before we create our K8s cluster, there is another very useful set of BOSH CLI commands. In order to run these commands, however, we need credentials from our Pivotal Ops Manager. Here are the two “om” (short for Ops Manager) commands to retrieve them: the first saves the Ops Manager CA certificate to /root/opsmanager.pem, and the second returns the BOSH command line credentials (replace pivotal-ops-mgr.rainpole.com with your own Ops Manager):

root@pks-cli:~# om --target https://pivotal-ops-mgr.rainpole.com -u admin -p -k curl -p /api/v0/certificate_authorities | jq -r '.certificate_authorities | map(select(.active == true))[0] | .cert_pem' > /root/opsmanager.pem
Status: 200 OK
Cache-Control: no-cache, no-store
Connection: keep-alive
Content-Type: application/json; charset=utf-8
Date: Mon, 16 Apr 2018 10:17:31 GMT
Expires: Fri, 01 Jan 1990 00:00:00 GMT
Pragma: no-cache
Server: nginx/1.4.6 (Ubuntu)
Strict-Transport-Security: max-age=15552000
X-Content-Type-Options: nosniff
X-Frame-Options: SAMEORIGIN
X-Request-Id: 8dea4ce9-a1d5-4b73-b673-fadd439d4689
X-Runtime: 0.034777
X-Xss-Protection: 1; mode=block

root@pks-cli:~# om --target https://pivotal-ops-mgr.rainpole.com -u admin -p -k curl -p /api/v0/deployed/director/credentials/bosh2_commandline_credentials -s | jq -r '.credential'
BOSH_CLIENT=ops_manager
BOSH_CLIENT_SECRET=DiNXMj11uyC9alp3KJGOMO5xyATCg—F
BOSH_CA_CERT=/var/tempest/workspaces/default/root_ca_certificate
BOSH_ENVIRONMENT=10.27.51.177

Ignore the BOSH_CA_CERT value (we point it at the /root/opsmanager.pem file saved by the first command instead), but take the rest of the output from the command and update your ~/.bash_profile. Add the following entries:

· export BOSH_CLIENT=ops_manager
· export BOSH_CLIENT_SECRET=<whatever-the-command-returned-to-you>
· export BOSH_CA_CERT=/root/opsmanager.pem
· export BOSH_ENVIRONMENT=<whatever-the-BOSH-ip-returned-to-you>
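Rather than copying the values by hand, the KEY=value pairs returned by the second om command can be turned into export lines. The helper below is a hypothetical sketch: it accepts the pairs either space- or newline-separated, ignores any token that is not a BOSH_* assignment, and swaps BOSH_CA_CERT for the PEM file saved by the first om command (assumed to be /root/opsmanager.pem, as above). Values are written unquoted, just like the entries listed above.

```shell
#!/bin/sh
# Hypothetical helper: convert the KEY=value pairs from the
# bosh2_commandline_credentials call into export lines. BOSH_CA_CERT is
# replaced by the CA file saved earlier (default /root/opsmanager.pem).
bosh_exports() {
  ca_file=${1:-/root/opsmanager.pem}
  # Accept pairs separated by spaces or newlines; skip anything else.
  tr ' ' '\n' | while IFS= read -r pair || [ -n "$pair" ]; do
    case $pair in
      BOSH_CA_CERT=*) printf 'export BOSH_CA_CERT=%s\n' "$ca_file" ;;
      BOSH_*=*)       printf 'export %s\n' "$pair" ;;
      *)              ;;  # not a BOSH_* assignment, ignore
    esac
  done
}

# Example usage (pipe the output of the second om command above):
#   <second om command> | bosh_exports >> ~/.bash_profile
```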

Source your ~/.bash_profile so that the entries take effect. Now we can use the PKS CLI to log in to the UAA endpoint configured during the PKS configuration phase, and then create our first cluster:

· pks login -a uaa.rainpole.com -u admin -p -k
· pks create-cluster k8s-cluster-01 --external-hostname pks-cluster-01 --plan small --num-nodes 3

Note that there are two cluster references in the final command. The first, k8s-cluster-01, is how PKS identifies the cluster. The second, pks-cluster-01, is the external hostname for the K8s API: the expectation is that some external load-balancer front-end sits in front of the K8s cluster. So once again, we will need to edit our DNS and add this entry to resolve to the IP address of the K8s master node, once it is deployed. The plan entry relates to the plans that we set up in PKS earlier. One of the plans was labeled “small”, which is what we have chosen here. Lastly, the number of nodes refers to the number of K8s worker nodes. In this example, there will be 3 workers, alongside the master.
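Cluster creation takes several minutes (the bosh task in this post reports a duration of 00:07:48). If you want a script to wait for it, a minimal polling sketch follows. It assumes the `pks cluster` output format shown in this post and that a failed action is reported as `failed` on the "Last Action State:" line; the function names, poll interval and retry count are arbitrary choices.

```shell
#!/bin/sh
# cluster_state reads "pks cluster" output on stdin and prints the value of
# the "Last Action State:" line (e.g. "in progress", "succeeded").
cluster_state() {
  sed -n 's/^Last Action State:[[:space:]]*//p' | head -n 1
}

# Hypothetical wrapper: poll until the last action succeeds or fails.
# Assumes a failed action is reported as "failed".
wait_for_cluster() {
  name=$1
  interval=${2:-30}   # seconds between checks
  attempts=${3:-60}   # give up after this many checks
  i=0
  while [ "$i" -lt "$attempts" ]; do
    state=$(pks cluster "$name" | cluster_state)
    case $state in
      succeeded) return 0 ;;
      failed)    echo "cluster $name: last action failed" >&2; return 1 ;;
    esac
    i=$((i + 1))
    sleep "$interval"
  done
  echo "cluster $name: still '$state' after $attempts checks" >&2
  return 1
}
```

For example, `wait_for_cluster k8s-cluster-01 && echo ready` after the create command returns.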

Here is the output of the create command:

root@pks-cli:~# pks create-cluster k8s-cluster-01 --external-hostname pks-cluster-01 --plan small --num-nodes 3
Name: k8s-cluster-01
Plan Name: small
UUID: 72540a79-82b5-4aad-8e7a-0de6f6b058c0
Last Action: CREATE
Last Action State: in progress
Last Action Description: Creating cluster
Kubernetes Master Host: pks-cluster-01
Kubernetes Master Port: 8443
Worker Instances: 3
Kubernetes Master IP(s): In Progress

And while that is running, we can run the following BOSH commands (assuming you have successfully run the om commands above):

root@pks-cli:~# bosh task
Using environment '10.27.51.181' as client 'ops_manager'


Task 404
Task 404 | 10:53:36 | Preparing deployment: Preparing deployment (00:00:06)
Task 404 | 10:53:54 | Preparing package compilation: Finding packages to compile (00:00:00)
Task 404 | 10:53:54 | Creating missing vms: master/a975a9f9-3f74-4f63-ae82-61daddbc78df (0)
Task 404 | 10:53:54 | Creating missing vms: worker/64a55231-cdb6-4ce5-b62c-83cc3b4b233d (0)
Task 404 | 10:53:54 | Creating missing vms: worker/c3c9b49a-f89a-41e6-a1af-36ea0416f3c3 (1)
Task 404 | 10:53:54 | Creating missing vms: worker/e6fd330e-4e95-4d46-a937-676a05a32e5e (2) (00:00:59)
Task 404 | 10:54:54 | Creating missing vms: worker/c3c9b49a-f89a-41e6-a1af-36ea0416f3c3 (1) (00:01:00)
Task 404 | 10:54:58 | Creating missing vms: worker/64a55231-cdb6-4ce5-b62c-83cc3b4b233d (0) (00:01:04)
Task 404 | 10:55:00 | Creating missing vms: master/a975a9f9-3f74-4f63-ae82-61daddbc78df (0) (00:01:06)
Task 404 | 10:55:00 | Updating instance master: master/a975a9f9-3f74-4f63-ae82-61daddbc78df (0) (canary) (00:02:03)
Task 404 | 10:57:03 | Updating instance worker: worker/64a55231-cdb6-4ce5-b62c-83cc3b4b233d (0) (canary) (00:01:25)
Task 404 | 10:58:28 | Updating instance worker: worker/c3c9b49a-f89a-41e6-a1af-36ea0416f3c3 (1) (00:01:28)
Task 404 | 10:59:56 | Updating instance worker: worker/e6fd330e-4e95-4d46-a937-676a05a32e5e (2) (00:01:28)


Task 404 Started Tue Apr 24 10:53:36 UTC 2018
Task 404 Finished Tue Apr 24 11:01:24 UTC 2018
Task 404 Duration 00:07:48
Task 404 done


Succeeded

root@pks-cli:~# bosh vms
Using environment '10.27.51.181' as client 'ops_manager'


Task 409
Task 410
Task 409 done


Task 410 done

Deployment 'pivotal-container-service-e7febad16f1bf59db116'
Instance Process State AZ IPs VM CID VM Type Active
pivotal-container-service/d4a0fd19-e9ce-47a8-a7df-afa100a612fa running CH-AZ 10.27.51.182 vm-54d92a19-8f98-48a8-bd2e-c0ac53f6ad70 micro false


1 vms

Instance Process State AZ IPs VM CID VM Type Active
master/a975a9f9-3f74-4f63-ae82-61daddbc78df running CH-AZ 10.27.51.185 vm-1e239504-c1d5-46c0-85fc-f5c02bbfddb1 medium false
worker/64a55231-cdb6-4ce5-b62c-83cc3b4b233d running CH-AZ 10.27.51.186 vm-a0452089-b2cc-426e-983c-08a442d15f46 medium false
worker/c3c9b49a-f89a-41e6-a1af-36ea0416f3c3 running CH-AZ 10.27.51.187 vm-54e3ff52-a9a0-450a-86f4-e176afdb47ff medium false
worker/e6fd330e-4e95-4d46-a937-676a05a32e5e running CH-AZ 10.27.51.188 vm-fadafe3f-331a-4844-a947-2390c71a6296 medium false


4 vms

Succeeded

root@pks-cli:~#

root@pks-cli:~#

root@pks-cli:~# pks clusters
Name Plan Name UUID Status Action
k8s-cluster-01 small 72540a79-82b5-4aad-8e7a-0de6f6b058c0 succeeded CREATE


root@pks-cli:~# pks cluster k8s-cluster-01
Name: k8s-cluster-01
Plan Name: small
UUID: 72540a79-82b5-4aad-8e7a-0de6f6b058c0
Last Action: CREATE
Last Action State: succeeded
Last Action Description: Instance provisioning completed
Kubernetes Master Host: pks-cluster-01
Kubernetes Master Port: 8443
Worker Instances: 3
Kubernetes Master IP(s): 10.27.51.185


cormac@pks-cli:~$

The canary steps are interesting. This is where BOSH first updates a single instance (the canary) with the new components/software, and only if that works does it go on to update the remaining instances, so a bad change does not impact the whole running environment. You will see one canary for the master and one for the workers. If the worker canary is successful, the remaining workers are updated without each of them being treated as a canary.
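The canary idea is easy to model. The sketch below is a simplified illustration, not BOSH's implementation: the first instance is updated on its own, and the remaining instances are only touched if that succeeds. Real BOSH updates the non-canary instances in parallel according to the deployment's max_in_flight setting, whereas this sketch updates them one at a time; the update command passed in is hypothetical.

```shell
#!/bin/sh
# Simplified model of a canary rollout (illustration only, not BOSH code):
# update the first instance alone; touch the rest only if it succeeds.
# $1 is a command that updates one instance (hypothetical); the remaining
# arguments are instance names, canary first.
canary_rollout() {
  update_cmd=$1
  shift
  [ "$#" -gt 0 ] || return 0      # nothing to update
  canary=$1
  shift
  if ! "$update_cmd" "$canary"; then
    echo "canary $canary failed; remaining instances left untouched" >&2
    return 1
  fi
  # BOSH would update these in parallel up to max_in_flight; this sketch
  # goes one at a time for clarity.
  for instance in "$@"; do
    "$update_cmd" "$instance" || return 1
  done
}
```

For example, `canary_rollout update_worker worker/0 worker/1 worker/2`, where `update_worker` stands in for whatever command updates a single worker.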

The very last command has returned the IP address of the master. We can now add the DNS entry for pks-cluster-01 with this IP address.
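The master IP can also be picked out of the `pks cluster` output programmatically and turned into a hosts-file line for the external hostname. A sketch, assuming the output format shown above and a single master IP; `master_ip` and `hosts_line` are hypothetical names.

```shell
#!/bin/sh
# Print the value of the "Kubernetes Master IP(s):" line from
# "pks cluster <name>" output read on stdin.
master_ip() {
  sed -n 's/^Kubernetes Master IP(s):[[:space:]]*//p' | head -n 1
}

# Print "IP HOSTNAME" for the given external hostname, or fail while the
# master IP is not yet known (the create output shows "In Progress").
hosts_line() {
  ip=$(master_ip)
  case $ip in
    ''|'In Progress') echo "master IP not available yet" >&2; return 1 ;;
  esac
  printf '%s %s\n' "$ip" "$1"
}
```

For example, `pks cluster k8s-cluster-01 | hosts_line pks-cluster-01 >> /etc/hosts` (as root, and only if you are using /etc/hosts rather than DNS).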

9. Using kubectl

Excellent, now you have your Kubernetes cluster deployed. We also installed the K8s CLI utility, kubectl, on the PKS client, so let’s run a few commands and examine our cluster. First, we will need to authenticate. We can do that with the following command:

root@pks-cli:~# pks get-credentials k8s-cluster-01
Fetching credentials for cluster k8s-cluster-01.
Context set for cluster k8s-cluster-01.


You can now switch between clusters by using:
$kubectl config use-context <cluster-name>


root@pks-cli:~# kubectl config use-context k8s-cluster-01
Switched to context "k8s-cluster-01".
root@pks-cli:~#


You can now start using kubectl commands to examine the state of your cluster.
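If you want to script a readiness check, for example before deploying apps onto a freshly created cluster, the `kubectl get nodes` output can be checked for nodes that are not Ready. A sketch, assuming the default column layout where STATUS is the second column and the first line is the header; kubectl's structured output (`-o json` or `-o jsonpath`) would be more robust than parsing columns. The function name is hypothetical.

```shell
#!/bin/sh
# Read "kubectl get nodes" output on stdin and succeed only if there is at
# least one node and every node reports STATUS "Ready".
all_nodes_ready() {
  awk 'NR == 1 || NF == 0 { next }          # skip header and blank lines
       { total++; if ($2 != "Ready") notready++ }
       END { exit (total == 0 || notready > 0) }'
}
```

For example, `kubectl get nodes | all_nodes_ready && echo "all nodes Ready"`.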

root@pks-cli:~# kubectl get nodes
NAME STATUS ROLES AGE VERSION
2ca99275-244c-4e21-952a-a2fb3586963e Ready <none> 15m v1.9.2
7bdaac2e-272e-45ae-808c-ada0eafbb967 Ready <none> 18m v1.9.2
a3d76bbe-31da-4531-9e5d-72bdbebb9b96 Ready <none> 16m v1.9.2


root@pks-cli:~# kubectl get nodes -o wide
NAME STATUS ROLES AGE VERSION EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME
2ca99275-244c-4e21-952a-a2fb3586963e Ready <none> 15m v1.9.2 10.27.51.188 Ubuntu 14.04.5 LTS 4.4.0-116-generic docker://1.13.1
7bdaac2e-272e-45ae-808c-ada0eafbb967 Ready <none> 18m v1.9.2 10.27.51.186 Ubuntu 14.04.5 LTS 4.4.0-116-generic docker://1.13.1
a3d76bbe-31da-4531-9e5d-72bdbebb9b96 Ready <none> 17m v1.9.2 10.27.51.187 Ubuntu 14.04.5 LTS 4.4.0-116-generic docker://1.13.1


root@pks-cli:~# kubectl get pods
No resources found.


The reason I have no pods is that in this deployment, I did not check the “Enable Post Deploy Scripts” option when setting up the Director initially. If I had checked it, I would have the K8s dashboard running automatically. No big deal, I can deploy it manually.

root@pks-cli:~# kubectl create -f https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml
secret "kubernetes-dashboard-certs" created
serviceaccount "kubernetes-dashboard" created
role "kubernetes-dashboard-minimal" created
rolebinding "kubernetes-dashboard-minimal" created
deployment "kubernetes-dashboard" created
service "kubernetes-dashboard" created


root@pks-cli:~# kubectl get pods --all-namespaces
NAMESPACE NAME READY STATUS RESTARTS AGE
kube-system kubernetes-dashboard-5bd6f767c7-z2pql 1/1 Running 0 2m


root@pks-cli:~# kubectl get pods --all-namespaces -o wide
NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE
kube-system kubernetes-dashboard-5bd6f767c7-z2pql 1/1 Running 0 3m 10.200.22.2 a3d76bbe-31da-4531-9e5d-72bdbebb9b96
root@pks-cli:~#


Let’s run another application as well: a simple hello-world app.

root@pks-cli:~# kubectl run hello-node --image gcr.io/google-samples/node-hello:1.0
deployment "hello-node" created


root@pks-cli:~# kubectl get pods --all-namespaces
NAMESPACE NAME READY STATUS RESTARTS AGE
default hello-node-6c59d566d6-85m5s 0/1 ContainerCreating 0 2s
kube-system kubernetes-dashboard-5bd6f767c7-z2pql 1/1 Running 0 30m


root@pks-cli:~# kubectl get pods --all-namespaces
NAMESPACE NAME READY STATUS RESTARTS AGE
default hello-node-6c59d566d6-85m5s 1/1 Running 0 3m
kube-system kubernetes-dashboard-5bd6f767c7-z2pql 1/1 Running 0 30m


And now we have the K8s dashboard running. However, we are not able to point directly at our master node to access this dashboard due to authorization restrictions. William once again saves the day with these steps on how to access the K8s dashboard via a tunnel and the kubectl proxy. Once you have connected to the dashboard and uploaded the K8s config file from the PKS client, you should be able to see any apps that you have deployed (in my case, the simple hello app).
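For reference, the tunnel-plus-proxy approach generally looks like the sketch below (not necessarily William's exact steps). It assumes `kubectl proxy` is left at its default of 127.0.0.1:8001 on the PKS client, that you can SSH to that VM as root, and that the dashboard was deployed from the recommended YAML above, which exposes it over HTTPS as the kubernetes-dashboard service in kube-system. The `dashboard_url` helper is hypothetical and only builds the proxy URL.

```shell
#!/bin/sh
# Build the URL for the dashboard when reached through "kubectl proxy".
# Defaults assume the proxy's default port (8001) and the service created by
# the recommended dashboard YAML (HTTPS, kube-system/kubernetes-dashboard).
dashboard_url() {
  port=${1:-8001}
  namespace=${2:-kube-system}
  service=${3:-kubernetes-dashboard}
  printf 'http://localhost:%s/api/v1/namespaces/%s/services/https:%s:/proxy/\n' \
    "$port" "$namespace" "$service"
}

# Typical flow (not executed here):
#   1. On the PKS client:   kubectl proxy
#      (listens on 127.0.0.1:8001 by default)
#   2. On your workstation: ssh -L 8001:127.0.0.1:8001 root@pks-cli
#   3. Open the URL printed by dashboard_url in a local browser and upload
#      the kubeconfig from the PKS client when prompted.
```

Replace `pks-cli` with the address of your own PKS client.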

There you have it. Now you have the infrastructure in place to very simply deploy K8s clusters for your developers. I’ll follow up with a post on some of the challenges I met, especially with the networking, as this should help anyone looking to roll this out in production. But for now, I think this post is already long enough. Thanks for reading to the end. And kudos once more to William Lam and his great blog, which provided a lot of guidance on how to successfully deploy PKS and K8s.
